IBExpert Documentation

IBExpert is a professional Integrated Development Environment (IDE) for the development and administration of InterBase and Firebird databases.

If you prefer a hard copy of this documentation, please register, including full address details, at the IBExpert Download Center. You can then use your registration e-mail and password to download either directly from the Download Center or use them to access http://www.h-k.de/docu/ where, at both locations, you can download the complete IBExpert documentation as a PDF file. Individual chapters in this online documentation may be selected and printed by clicking the Print menu item in the top right-hand corner of this window. Important: please read our copyright conditions!

Getting started

In order to start working and developing with IBExpert, it is necessary to take the following steps:

- Download and install Firebird (Open Source database). Alternatively you may also, of course, install InterBase®.

- Download and install IBExpert (Personal, Trial or Customer edition)

- Registering a database (the example uses the

EMPLOYEE database supplied with Firebird and InterBase)

- Working with a database (based on the

EMPLOYEE sample database).

- IBExpert Screen: get acquainted with IBExpert and how it's set up.

- Where to go from here: if you're just starting out, take the time to read through the documentation sources listed in this section.

Download and install Firebird

Firebird is renowned for its ease of installation and administration. Even an inexperienced user can download and install Firebird using the Installer, with just a number of mouse clicks. If you are totally new to Firebird, please first read the chapter, Server versions and differences to help you decide which Firebird version you need.

The current Firebird version can be downloaded free of charge from http://firebirdsql.org subject to Open Source conditions. Alternatively, use the IBExpert Help menu item Download Firebird to directly access the download website.

Simply click the DOWNLOAD tab and select All Released Packages (Source Forge). The download packages come in a variety of options according to: server type (Classic, SuperServer and Embedded), server version, platform, and incorporating the Installer or as a ZIP file.

Scroll down to the latest file releases and click DOWNLOAD to the right of the version for your platform, for example Firebird releases for Windows and Linux (most current version in March 2008 is Firebird 2.1 RC1 from January 23rd, 2008). Please refer to Posix Platforms and Windows Platforms for further information for individual platforms with regard to download and installation.

If you are new to Firebird, then go for a version using the Installer. The Zip kit is for manual, custom installs of Classic or Superserver.

A new window appears:

Click on the green Download button to the right of the Firebird file you require. Select the file(s) you wish to download:

If required, select a download server:

Specify drive and path for the download file and save.

Before you proceed with the installation (either using the Firebird Installer or manually from the ZIP file), please ensure first that there is no Firebird server already running on the machine you are about to install onto.

Installation using the Firebird Installer

Now double-click the downloaded firebird file to start the installation. Again, please refer to Windows Platforms and Posix Platforms for installation details for the various platforms.

Read and accept the Firebird License Agreement, before proceeding further.

Specify the drive and path where you wish the Firebird server to be installed. Please note that the Firebird server, along with any databases you create or connect to, must reside on a hard drive that is physically connected to the host machine. It is not possible to locate components of the server or database on a mapped drive, a file system share or a network file system.

The Firebird server must be installed on the target computer. In the case of the Embedded Server version the client library is embedded in the server, this combination performing the work of both client and server for a single attached application.

Then select the components you wish to install. If you are still fairly new to Firebird, select the default option, Full installation of Server and development tools, checking the Classic or SuperServer option as wished.

After confirming or altering the Start Menu folder name (or checking the Don't create a Start Menu folder box), you arrive at the Check Additional Tasks dialog:

The Firebird Guardian: The Firebird Guardian is a monitoring utility that does nothing other than check whether the Firebird server is running or not. Nowadays it is not really necessary on modern Windows systems, as it is possible to restart the Firebird service, should it cease to run for any reason, using the operating system. Use the Windows Services (Restore page) to specify that every time the Firebird service stops, it should be restarted. When the service is halted, the restart can be viewed in the Windows Event Log.

However if the server does go down, it's important to find out what caused it. The logs need checking to trace page corruption and an immediate decision needs to be made right there and then, whether to regress backwards or move forwards. An automatic restart automatically leads to more crashes and more corruption, until the problem is noticed and causes analyzed and repaired. So consider carefully, whether you wish to have the Guardian running in the background on your database server or not.

Further parameter check options include the following:

- Run the Firebird server as an application or service.

- Start Firebird automatically every time you boot up: recommended.

- "Install Control Panel Applet": Windows Vista CAUTION If you are installing onto Windows Vista, the installer option to install the Control Panel applet must be DISABLED to avoid having it break the Control Panel on your Vista system.

- Copy Firebird client library to <system> directory: care needs to be taken here if there is more than one instance of Firebird running on the server. If the

fbclient.dll is simply overwritten, it can cause problems for the Firebird server that is already installed and running. Instead of copying to the \system directory, simply move it to your application directory.

- Generate client library as GDS32.DLL for legacy app. support: Many programs, including for example older Delphi versions, rely on a direct access using this file name. This option can be checked to copy the file under the old name.

Should problems be encountered during installation, please refer to the Firebird Information file.

The IBExpertInstanceManager service creates a replacement for the Firebird Guardian, which is important if you have more than one Firebird/InterBase server installed, because the Firebird Guardian only works with the Firebird default instance. Please refer to the IBExpertInstanceManager documentation for further information.

Windows platforms

On Windows server platforms - Windows NT, 2000 and XP, the Firebird service is started upon completion of the installation. It starts automatically every time the server is booted up.

The non-server Windows platforms, Windows 95, 98 and ME, do not support services. The installation starts the Firebird server as an application, protected by another application known as the Guardian. Should the server application terminate abnormally, the Guardian will attempt to restart it.

Posix platforms

As there may be significant variations from release to release of any Posix operating system, especially the open source one, it is important to read the release notes pertaining to the Firebird version to be installed. These can be downloaded from the Download page at http://firebird.sourceforge.net or viewed here:

Please also refer to Firebird 2 Migration & Installation: Installing on POSIX platforms and consult the appropriate platform documentation, if you have a Linux distribution supporting rpm installs, for instructions about using the RedHat Package Manager. Most distributions offer the choice of performing the install from a command shell or through a GUI interface.

For Linux distributions that cannot process rpm programs, use the .tar.gz kit. Again instructions are included in the release notes (see above link).

Shell scripts have been provided, but in some cases, the release notes may advise modification of the scripts as well as some manual adjustments.

ZIP installation

Another way to install Firebird is from a ZIP file. This method is more flexible for embedded installations. Download the appropiate ZIP file from the Firebird Download site, following the directions at the beginning of this chapter. This ZIP file basically contains the complete installation structure.

It includes a pretty much "pre-installed" server, which you can simply copy to any directory as wished, and which you can integrate into your installation by simply calling batch files. Simply start the install_classic.bat or install_super.bat, depending upon which server you wish to install:

The instreg utility does all the work, making the necessary entries in the right places, and installs everything required in the Registration. It usually installs the Firebird Guardian too, and finally starts the service.

This is the ideal solution for development applications which are being passed onto customers: simply pack the complete Firebird ZIP directory in with your application, so that when you call your Installer, the only work necessary is to call the appropiate batch file.

Performing a client-only install

Each remote client machine needs the client library that matches the release version of the Firebird server: libgds.so on Posix clients; gds32.dll on Windows clients.

Firebird versions from 1.5 onward require an additional client library, libfb.so or fb32.dll, which contains the full library. In these newer distributions, the "gds"-named files are distributed to maintain compatibility with third-party products which require these files. Internally, the libraries jump to the correct access points in the renamed libraries.

Also needed for the client-only install:

Windows

If you want to run Windows clients to a Linux or other Posix Firebird server, you need to download the full Windows installation kit corresponding to the version of Firebird server installed on the Linux or other server machine.

Simply run the installation program, as if you were going to install the server, selecting the CLIENT ONLY option in the Install menu.

Linux and some other Posix clients

Some Posix flavors, even within the Linux constellation, have somewhat idiosyncratic requirements for file system locations. For these reasons, not all *x distributions for Firebird even contain a client-only install option.

For the majority, the following procedure is suggested for Firebird versions lower than 1.5. Log in as root for this.

- Search for

libgds.so.0 in /opt/interbase/lib on the machine where the Firebird server is installed, and copy it to /usr/lib on the client.

- Create the symlink

libgds.so or it, using the following command: ln -s /usr/lib/libgds.so.0 /usr/lib/libgds.so

- Copy the

interbase.msg file to /opt/interbase.

- In the system-wide default shell profile, or using

setenv() from a shell, create the INTERBASE environment variable and point it to /opt/interbase, to enable the API routines to locate the messages.

Excerpts of this article have been taken from the IBPhoenix "Firebird Quick Start Guide". Many thanks to Paul Beach (http://www.ibphoenix.com)!

Performing a minimum Firebird 1.5 client install

By Stefan Heymann (April 11th 2004)

This article describes how to run Firebird 1.5 based applications with the absolute minimum client installation required.

What you need

Your application needs access to the Firebird client library, fbclient.dll. The easiest way to do this is to put fbclient.dll in the same directory as your application's .exe file.

fbclient.dll needs access to two other DLLs: svcp60.dll and msvcrt.dll. Both are delivered together with the Windows installation of Firebird, so if you have a Firebird server installed on your development machine, you'll find these DLLs in the bin directory of your Firebird installation.

msvcrt.dll (Microsoft Visual C/C++ RunTime) is a part of Windows and resides in the Windows\System directory on Win9x machines and in Windows\System32 on NT-based machines (NT4, W2K, XP, 2003). On Windows 95 and Windows 98 machines, it's too old for the msvcp60.dll that fbclient.dll uses. So you'll have to replace the msvcrt.dll by the one that comes with Firebird (or even a newer one).

msvcp60.dll can stay in your application directory.

Your application directory now looks like this:

<YourApp>.exe and other application files

fbclient.dll

msvcp60.dll

That's it. Easy!

What you have to write to the registry

Nothing - there's nothing you'll have to do to the registry.

What you have to do to the Windows\System directory

Only on Windows 95 and Windows 98 "First Edition" machines: you will need to replace msvcrt.dll with the newer version that comes with Firebird 1.5 (if there isn't already a new version installed).

Some version numbers of msvcrt.dll:

| Windows 98 FE | 5.00.7128 | does NOT work |

| Windows 98 SE | 6.00.8397.0 | works |

| Firebird | 1.5.0 6.00.8797.0 | works |

| Windows XP SP1 | 7.0.2600.1106 | works |

What you have to do to your code (Delphi, IBObjects)

A "normal" InterBase access library uses gds32.dll as the client library. Firebird's client library is named fbclient.dll. If you use IBObjects ( http://www.ibobjects.com/), you can set another client library name.

- Include

IB_Constants.pas as the first unit in your USES clause.

- Put the following line in the

INITIALIZATION part of your Unit:

IB_Constants.IB_GDS32 := 'fbclient.dll';

- This line must be executed before the first database connect is performed.

Installing multiple instances with the Firebird Instance Manager

Pre-Firebird 2.1: If you already have a Firebird version installed on your machine, then you can subsequently rename the installation using the fbinst tool, which can be downloaded from: http://www.ibexpert.com/download/firebirdinstancemanager/. For example, rename your existing Firebird to MyFirebirdVersion and then install the new Firebird version without any problems. The Firebird Instance Manager was developed by Simon Carter. It isn't an IBExpert tool but it is extremely helpful if you find yourself in such a situation.

Since Firebird 2.1 the Installer offers the possibility to install multiple instances.

IBExpert introduced its own IBExpertInstanceManager as one of the HK-Software Services Control Center services in version 2008.08.08.

Install Firebird as an application

To run Firebird as an application, use the following parameter -a:

C:\Program Files\Firebird\Firebird_2_1\bin>fbserver a

This can, for example, be copied to any subdirectory of your application and controlled from the application so that when it starts, the Firebird server also starts. Furthermore you can, for example, directly specify the use of a different port. That way you just need to add the files to your individual setup, with the firebird.conf file port specification adjusted accordlingly. It is not advisable to use port 3050, the default Firebird port, because it is used by every other Firebird server. If you leave it on 3050 you may encounter problems if other Firebird installations are present.

When you are starting the Firebird server as an application, you do not need to install anything. Simply copy the data to the customer's workgroup server and start it from there.

See also:

Download Firebird / Purchase InterBase

Firebird License Agreement

Copy of Firebird Information File

FirebirdClassicServerVersusSuperServer

Firebird SQL

Firebird 2 Migration & Installation: Using the Firebird Installer

Installation

and the various Firebird documentation and articles found here: Documentation

Server versions and differences

Firebird is available for various platforms, the main ones are currently 32-bit Windows, Linux (i586 and higher, and x64 for Firebird 2.0 on Linux), Solaris (Sparc and Intel), HP-UX (PA-Risc), FreeBSD and MacOS X. Main development is done on Windows and Linux, so all new releases are usually offered first for these platforms, followed by other platforms after few days (or weeks).

There is also a choice of server architecture: Classic server or SuperServer. If you're not sure after reading this chapter, whether the Classic server or the SuperServer better meets your needs, then install the SuperServer.

Classic server

The Firebird Classic server offers multiple processes per connection and SMP (Symmetric Multi-Processing) support. Each connection uses one process. It supports multi-processor systems but no shared cache. I.e. each user connecting and requesting data, will have his/her data pages loaded into the cache, regardless of whether other users' request have already caused the server to load these pages. Which of course leads to a higher RAM necessity. However, as RAM and cache requirements are relevant to the size of the database file and the drive on which it is stored, the effects of this cache connection architecture doesn't necessarily have to be a bad thing.

The current Firebird 2.0.3 Classic Server is an excellent server. Classic can be a good choice if the host server has multiple CPUs and plenty of RAM. Should you have sufficient working memory, we recommend you use the Classic Server and set the cache per user somewhat lower.

Further information regarding the Classic server can be found in the Classic Server versus SuperServer article, in the InterBase Classic architecture chapter.

SuperServer

The Firebird SuperServer has one process and multiple threads, but no SMP (Symmetric Multi-Processing), i.e. a dual-core machine. It serves many clients at the same time using threads instead of separate server processes for each client. Multiple threads share access to a single server process, improving database integrity because only one server process has write access to the database. The main advantage is however that all connected users share the database cache. If a data page has already been loaded for one user, if the second user needs to access data on the same page, it doesn't need to be reloaded a second time into the cache.

Superserver's shared page cache, properly configured, can be beneficial for performance where many users are working concurrently. On the other hand, Superserver for Windows does not "play nice" with multiple CPUs on most systems and has to be set for affinity with just one CPU.

For further information regarding the SuperServer, please refer to the Classic Server versus SuperServer article, in the InterBase SuperServer architecture chapter.

Embedded server

The Embedded server allows only one local process per database, which of course means that it is unsuitable for a web server! The Firebird 2.1 Embedded Server version provides a useful enhancement: the client library is embedded in the server, this combination performing the work of both client and server for a single attached application. Only a few files are required without installation. It mainly consists of a slightly larger fbclient.dll, which is capable of providing the database server service to all installations. It is not necessary to install or start anything. This is particularly advantageous, for example, in the following situation:

You have an accounting application in the old 1997 version that you need to start today to view old data that was created and processed using this version. Normally you would have to search for the old version, install it, and - if for whatever reason it doesn't work anymore (or maybe you never managed to find it in the first place!) - you can't get to your data. Solution: pack your accounting application onto a DVD together with the correct Firebird embedded version. You can then start the application directly from the DVD without having to search and install anything. This is particularly useful when archiving data.

Firebird is, by the way, one of the few database systems that can read a database on a read-only medium.

For details regarding installation of the Embedded server, please refer to the Firebird Migration and Installation Guide chapter, Windows Embedded.

Firebird 2.1 new features

| Database triggers | Database triggers are user-defined PSQL modules that can be designed to fire in various connection-level and transaction-level events. |

| Global temporary tables | SQL standards-compliant global temporary tables have been implemented. These pre-defined tables are instantiated on request for connection-specific or transaction-specific use with non-persistent data, which the Firebird engine stores in temporary files. |

| Common table expressions (CTEs) | Standards-compliant common table expressions, which make dynamic recursive queries possible. |

RETURNING clause | Optional RETURNING clause for all singleton operations, UPDATE, INSERT and DELETE operations. |

UPDATE OR INSERT statement | New UPDATE OR INSERT for MERGE functionality: now you can write a statement that is capable of performing either an update to an existing record or an insert, depending on whether the targeted record exists. |

LIST() function | A new aggregate function LIST() retrieves all of the SOMETHINGs in a group and aggregates them into a comma-separated list. |

| New built-in functions | Dozens of built-in functions replacing many of the UDFs from the Firebird-distributed UDF libraries. |

Text BLOBs can masquerade as long VARCHARs | At various levels of evaluation, the engine now treats text BLOBs that are within the 32,765-byte size limit as though they were VARCHAR. String functions like CAST, LOWER, UPPER, TRIM and SUBSTRING will work with these BLOBs, as well as concatenation and assignment to string types. |

| Define PSQL variables and arguments using domains | PSQL local variables and input and output arguments for stored procedures can now be declared using domains in lieu of canonical data types. |

COLLATE in PSQL | Collations can now be applied to PSQL variables and arguments. |

| Windows security to authenticate users | Windows "Trusted User" security can be applied for authenticating Firebird users on a Windows server platform host. |

CREATE COLLATION command | The DDL command CREATE COLLATION has been introduced for implementing a collation, obviating the need to use the script for it. |

| Unicode collations anywhere | Two new Unicode collations can be applied to any character set using a new mechanism. |

| New platform ports | Ports to Windows 2003 64-bit (AMD64 and Intel EM64T) Classic, Superserver and Embedded models; PowerPC, 32-bit and 64-bit Intel Classic and SS ports for MacOSX. |

| Database monitoring via SQL | Run-time database snapshot monitoring (transactions, tables, etc.) via SQL over some new virtualized system tables. Included in the set of tables is one named MON$DATABASE that provides a lot of the database header information that could not be obtained previously via SQL: such details as the on-disk structure (ODS) version, SQL dialect, sweep interval, OIT and OAT and so on. It is possible to use the information from the monitoring tables to cancel a rogue query. |

| Remote interface | The remote protocol has been slightly improved to perform better in slow networks once drivers are updated to utilise the changes. Testing showed that API round trips were reduced by about 50 percent, resulting in about 40 per cent fewer TCP round trips. |

Please also refer to the Firebird 2.1 Release Notes.

Note: If you are upgrading from an older Firebird version to the new 2.1 version, it is also important that you upgrade all your clients accordingly. The Firebird 2.1 client can communicate much more effectively with the Firebird 2.1 server, which can mean performance improvements of up to 40%!

Red Database SuperClassic server

The Red Soft Corporation has already developed a SuperClassic server. The Red Database 2.1.0 engine is based on Firebird 2.1 and also contains the following new features above the Firebird 2.1 base line:

- Multi-threading architecture - SuperClassic server

- External stored procedures

- Stored procedures and triggers debugger

- Full-text search

- Improved security subsystem, providing the full set of tools for:

- authentication,

- fine-grained authorization controls, at row and field level,

- keeping detailed audit trail for users access to data,

- system and data integrity checking,

- analysis of security incidents.

New features in the security subsystem include:

- Integrated cryptographic module

- Complete DDL operations access control

- Complete DML operations access control

- The roles assigned to a user have cumulative effect on his (her) access rights

- The roles can be defined globally for the server

- The support for access policies

- Multi-factor authentication

- Audit system with capability to save log in binary format and analyze it via SQL statements

- Access controls for database service functions (backup, consistency validation, etc.)

- Security clean-up for deallocated memory

- Integrity checking for server files and data protection facilities

- Special tools for metadata and data integrity checking using digital signature

The integrated role for security and system administration enables you for example to give someone, who neither the database owner nor the SYSDBA, the ability to create procedures.

This database (Red Database Community Edition) can be downloaded free of charge from http://www.red-soft.biz/en. If you wish to distribute this as part of your own software package, you will however require a Distribution License. The license fee for unlimited licenses is currently around EUR 1,500 annually including support. For further information please mail info@ibexpert.biz.

Firebird 3.0 - the best of both worlds

Firebird 3.0 is intending to combine the advantages of both Classic and SuperServer: a SuperServer with SMP (Symmetric Multi-Processing) support. It will offer the shared cache, at the same time using multiple CPUs.

See also:

Firebird Classic Server versus SuperServer

Firebird 2 Quick Start Guide: Classic or Superserver

Installing on Linux

Firebird 2 Migration & Installation: Choosing a server

Configuring Firebird

Before we take a look at the two Firebird configuration files, we would like to point out that the most frequently asked question regarding these subjects is, "I've changed the parameter in the firebird.conf/aliases.conf and nothing's happened!" The simple solution is: remove the hash (#)! It's the symbol used for commenting.

aliases.conf

An alias is a pseudonym for the database connection string and database file name. The full connection string usually consists of the server name (or localhost) followed by the drive and path to the database file, with the database file name concatenating on the end. This informs the client, where he needs to send his data packets and access server data.

For security reasons it is not always desirable for each client user to see the full connection string, and there are obvious problems which arise when the database is moved to another drive or machine, as each client has to be informed of the new connection string. For these reasons it is recommended to give databases an alias name. All alias names are set in aliases.conf. There are no syntactical restrictions to the naming of aliases.

Using an alias, users are not able to see where the database really is and, should it be relocated, the new connection string only needs to altered once in the aliases.conf. Let's look at an example:

The alias db1 should refer to the database name, db1.fdb.

db1=c:\path\db1.fdb

This user alias has been specified for the database server. The client can also define such an alias connection when registering the database or subsequently in the IBExpert's Database Registration Info. The connection string is:

servername:aliasname

If the user wishes to connect to db1, he simply needs to enter

localhost:db1

in the Database Alias field. The aliases.conf file shows the server which database the client wishes to connect to.

When working with IBExpert, a database alias can be specified when registering the database. Refer to Register Database / Alias for further information.

Resolving the XP Windows System Restore problem

Windows XP has the unfortunate tendency to consider all files with the .GDB suffix to be a constituent of the Windows System Restore. This means that when you try to open your DB1.GDB, XP (default setting) first decides to make a copy of the file (just in case you need to restore it at some point), not allowing you access until it's completed. In the case of large database files, you can imagine how long this can take!

If you don't want to rename your database files just to suit Microsoft, then simply create an alias:

C:\db1.gdb = C:\db1.fdb

firebird.conf

Possible file locations are set in firebird.conf. The full set of firebird.conf parameters are described in detail in the firebird.conf file. The server needs to be restarted following any changes made in the firebird.conf for them to become valid. The following describes briefly the most important parameters:

RootDirectory

If you are using several installations of Firebird servers, use the RootDirectory parameter to specify where the active Firebird server can be found.

DatabaseAccess

An alias entry needs to exist. If a path is entered here, database files may only be stored in this path or its subdirectories.

DatabaseAccess = NONE

means that only file locations set in aliases.conf are available. The server can't access any other entries. This is a great security feature, because even when someone has a user name on the database server, he cannot create a database file, because it is not possible to specify an alias remotely.

ExternalFileAccess

Firebird has a mechanism enabling a table to be created externally, (i.e. not in the database), using the command:

create table external file

In order to allow such external files it is necessary to explicitly activate the ExternalFileAccess parameter. Options include: None, Full or Restrict. If you choose Restrict, provide a ';'-separated trees list, where external files are stored. Default value None disables any use of external files on your site.

UdfAccess

User-defined functions are used in Firebird to complement and extend the Firebird server's language. This parameter specifies where UDFs can be found. They are usually to be found in the subdirectory /UDF, and should - if possible - remain there. UdfAccess may be None, Full or Restrict. If you choose Restrict, provide a ';'-separated trees list, where UDF libraries are stored.

TempDirectories

Here you can specify where temporary files should be created. When the Firebird server receives a query including ORDER BY or similar, without an index, then Firebird has to sort the data somewhere. Firebird has a so-called Sort Buffer, which is principally a memory area where such sorting processes can be performed. If however you have a sorting operation that is 10 GB, Firebird needs somewhere to do this. From a certain size, when the Sort Buffer is no longer sufficient, it moves the job out into a temporary file, and you can specify here where these temp files should be.

Because of the intense batting backwards and forwards, you need to know where your temp file is in relation to your database. As soon as you need a temp file, it's because you don't have enough RAM or you've exceeded your internal limits. By its very nature, it's going to be reading things from the database cache and wanting to put things in the temp directory. So keeping those on separate disks will make a big difference. And you want to know where they are, to see how big they're getting.

What do you do if your database crashes mid-sort file? The temp files just sit there. So if you your system hangs and you need to reboot, you could suddenly have a lot of temp files. While they're being used they have a handle on them, so if you are allowed to delete or rename them, then it's fine because they're orphans.

The default value is determined using FIREBIRD_TMP, TEMP or TMP environment options. Every directory item may have optional size argument to limit its storage, this argument follows the directory name and must be separated by at least one space character. If the size argument is omitted or invalid, then all available space in this directory will be used.

Examples

TempDirectories = c:\temp;d:\temp

or

TempDirectories = c:\temp 100000000;d:\temp 500000000;e:\temp

DefaultDbCachePages

This influences the cache by setting the number of pages from any one database that can be held in the cache at once. By default, the SuperServer allocates 2048 pages for each database and the Classic allocates 75 pages per client connection per database. Before altering either of these values please refer to Page size and Memory configuration.

RemoteServiceName

This is the TCP Service name to be used for client database connections. It is only necessary to change either the RemoteServiceName or RemoteServicePort, not both. The order of precendence is the RemoteServiceName (if an entry is found in the services. file) and then the RemoteServicePort.

You don't need to change this if it's your only install.

E.g. RemoteServiceName = gds_db

RemoteServicePort

This is the TCP Port number to be used for client database connections. It is only necessary to change either the RemoteServiceName or RemoteServicePort, not both. The order of precendence is the RemoteServiceName (if an entry is found in the services. file) then the RemoteServicePort.

You don't need to change this if it's your only install.

E.g. RemoteServicePort = 3052

RemoteBindAddress

Allows incoming connections to be bound to the IP address of a specific network card. It enables rejection of incoming connections through any other network interface except this one. By default, connections from any available network interface are allowed.

CpuAffinityMask

This parameter only applies to SuperServer on Windows.

In an SMP (Symmetric Multi-Processing) system, this sets which processors can be used by the server. The value is taken from a bit map in which each bit represents a CPU. Thus, to use only the first processor, the value is 1. To use both CPU 1 and CPU 2, the value is 3. To use CPU 2 and CPU 3, the value is 6. The default value is 1. It doese make sense however to allow Firebird to use at least 2 CPUs, so that if the traffic on one of them gets halted due to, for example, a query going wrong, all other traffic can use the second CPU.

CpuAffinityMask = 1

See also:

Firebird 2.1 Release Notes: New configuration parameters and changes

Download and install InterBase®

This guide will lead you through the process of downloading and installing the free trial version of InterBase. For those having purchased InterBase®, the installation routine is the same (just skip the download instructions).

The current InterBase® trial version (at the time of writing this) was version 2007. It is a full InterBase server version and runs for 90 days. It can be downloaded free of charge from http://www.codegear.com/downloads.

Click on InterBase, and then scroll down the list of Server versions and select the one you require.

Click the Download button and agree to comply with the Export Controls, to download the InterBase software to your hard drive.

You will then need to enter your name, email and basic company information to receive your activitation certificate. You will need to activate InterBase 2007 Server Trial for Windows, otherwise it won't run. Fill out the online form and your activation information will be immediately mailed to your inbox. If you already have the InterBase 2007 Server Trial for Windows on disc, you do not need to download it, but you will still need to request activation here.

You must save the emailed activation file to your InterBase /license directory before you can use InterBase. If the server won't start, your activation file may not have been saved correctly. The email provides complete instructions.

Extract the downloaded ZIP file (for example in Windows to C:\Program Files\Interbase) and start the relevant install_[platform].exe file.

To start the installation simply double-click the install executable.

For those installing InterBase for the first time, we recommend first clicking the InterBase Setup Information button (or open IBSetup.html in the installation package to open: Installation, Registration, and Licensing Information for Borland® InterBase® 2007.

The Install Borland InterBase Server button guides you through the installation: Check the software to be installed, and follow the prompts to accept the license agreement. Confirm whether you wish to use Multi Instances; if you do, change the Instance Name and TCP Port from the default values, gds_db and 3050. Then confirm which options you wish to install, confirm the directory to be installed into or select a directory of your choice. After prompting a couple more times, InterBase is then installed.

The Registration Wizard then automatically starts for those who have purchased InterBase. Users of the Trial version should follow the instructions in the Product Registration email from CodeGear.

What is IBExpert?

Visit our product site for further details.

Test IBExpert for yourself - simply download the Trial Version (setup_trial.exe). These files are fully functional versions in the last stable build. They run for 45 days without any restrictions.

Alternatively purchase a full registered IBExpert version; again details can be found on our website.

Download and install IBExpert on Windows

Customer Version

IBExpert can be downloaded from the IBExpert download pages. There are a number of versions - please refer to IBExpert licenses for further information.

If you are installing an IBExpert version update over an old IBExpert version (before December 2007) you will need to uninstall older versions first, as we have updated the IBExpert installer. You can do this simply and quickly by selecting all IBExpert products in the Windows Control Center / Add or Remove Software.

All registered databases are stored in the directory, C:\Documents and Settings\%user%\Applicationdata\HK-Software\IBExpert or, if used, in the User Database. Please backup these files before uninstalling.

The download page on the IBExpert website offers a number of download options:

Registered customers should click on the Customer Download link. Enter your user name and the password supplied with the registration confirmation. The Username is a combination of key A and key B (for example 1234567887654321 when key A is 12345678 and key B is 87654321). The Password is always ibexpert.

The current IBExpert version can be found by scrolling down to setup_customer.exe: these files include the unlimited use of the full version. These setup_customer.exe files comprise the full IBExpert Developer Studio versions.

Customers installing their first fully licensese IBExpert customer version will be asked to register the product the first time the application is started. Please check that the computer name and company name which appears in the Registration window is the same as the computer name and company name quoted on the license form. Then simply enter Key A and Key B and click the Register button. You should receive a confirmation message stating that your IBExpert version has been successfully registered. Customers with site or VAR licenses need to copy the license file into the IBExpert directory before starting IBExpert for the first time in order to avoid this key request.

Personal Edition

Those wishing to download the free Personal Edition (for more information please refer to IBExpert Personal Edition), click on download free to register at the IBExpert Download Center:

Once you have registered you will be sent a password by e-mail which allows you access to the IBExpert Personal Edition download file. You simply need to login, click the Download tab to switch to the Download page, and select the file required.

The Install Wizard offers those IBExpert Developer Studio Tools available in the Personal Edition:

Trial Version

For those wishing to download the IBExpert Trial Version, go to Download Trial and click Download to download the setup_trial.exe file.

Installation

Double-click the EXE file to start the installation. The IBExpert Customer and Trial versions both offer the full selection of all IBExpert Developer Studio Tools:

Following confirmation of the License Agreement and confirmation or alteration of the installation directory, IBExpert is automatically installed and started.

To alter the IBExpert interface language, use the IBExpert menu Options / Environment Options. Use the drop-down list found under Interface Language to select the language of your choice. This dialog also offers default options for the specification of the database version and client library.

Should you encounter any problems whilst attempting to download IBExpert, please send an e-mail (in either the English or German language) to register@ibexpert.com, with a detailed error description.

To keep you informed of all new developments, we recommend you retain IBExpert Direct which is automatically activated in IBExpert. Further information regarding IBE Direct and adjusting the default settings can be found in the IBExpert Help menu item, IBExpert Direct.

We also recommend you subscribe to the IBExpert newsletter, which informs you of new developments and new versions (including documentation of all new features). Simply send a mail to news@ibexpert.com entering SUBSCRIBE in the subject heading.

See also:

Select interface language

Registering a database (using the EMPLOYEE example)

Working with a database

Where to go from here

Installing IBExpert under Linux

The following describes the IBExpert installation under ubuntu 8.1.0.

Details regarding the installation of IBExpert under Conectiva Linux version 10 can be found in the database technology article, Using IBExpert and Delphi applications in a Linux environment, accessing Firebird.

Installing IBExpert under Linux ubuntu 8.1.0

Install Wine

You will need to open a shell to install Wine (the graphical interface cannot be used because you need to be able to log in as root to install these tools). Run the installation as root or through kdesu or sudo programs. This article uses sudo commands in its examples.

Open the Konsole (found under Applications/System Tools), and log in as superuser:

sudo su

entering the password when prompted ([sudo] password for xxx:).

Firstly you need to download the newest Wine version which can be found at:http://winehq.org/site/download. At http://winehq.org/site/download_deb you can find the most up-to-date version for Debian derivatives, including ubuntu.

Using ubuntu 8.1.0 the following command automatically adds the newest Wine version to the sources:

sudo wget http://wine.budgetdedicated.com/apt/sources.list.d/hardy.list -O /etc/apt/sources.list.d/winehq.list

Then you simply need to enter:

sudo apt-get install wine & sudo apt-get update

to install the newest version.

Don't run IBExpert before doing the next steps. If you have done, you will probably need to delete the .wine directory.

Upon completion of the installation enter:

winecfg

This will open a configuration dialog, which can immediately be closed again. This command automatically creates a .wine folder in the Home directory.

The next step entails the execution of the following two commands which run a script in order to obtain a native DCOM98:

wget http://kegel.com/wine/winetricks

and

sh winetricks dcom98

(alternative site: http://wiki.winehq.org/NativeDcom)

Now both the msls31.dll and the riched20.dll need to be copied into the .wine/drive_c/windows/system32 directory. These files can be found, for example, in a Windows system.

Finally an entry needs to be added to the windcfg file: riched20.dll should be entered on the Libraries page under New override for library:. Click on the Add button and you should see riched20.dll appear in the list below:

You should now be able to run most Windows applications.

Install IBExpert under Wine

Before installing IBExpert, open the Wine configuration and, on the Applications page, select Windows 98 from the Windows Version list:

This is only necessary for the IBExpert installation, and can be changed back immediately to the Windows version of your choice as soon as IBExpert has been installed.

Now you need to enter the command:

wine <IBExpert InstallationFile.exe>

to install IBExpert. The installation procedure runs in exactly the same way as described for Windows (refer to Download and install IBExpert under Windows.

Upon completion of the installation, you only need to make one adjustment. Under the IBExpert Options menu item, Environment Options you need to specify the Default Client Library path to the fbclient.dll or gds32.dll. This can be found either in a Windows installation or the Windows Server Version installed with Wine without the developer and server components (in this case you will find the gds32.dll or fbclient.dll (if you're not sure which client library you need, install both) under: ~/.wine/drive_c/windows/system32). Please note that all names and extensions must be written in lower case.

Then it only remains to reset the Windows Version in the Wine configuration to the version of your choice and IBExpert can now connect to any Firebird (or InterBase) server.

By the way, under Options / Environment Options you can specify the language for IBExpert: select the language of your choice from the drop-down list, Interface Language (details can be found here: Download und Install IBExpert under Windows).

If you are new to IBExpert, please refer to the IBExpert documentation chapters, Registering a database , Working with a database, and Where to go from here to help you get started.

Install Firebird under Linux

If you are not accessing remotely to a Firebird database already installed on another machine, you will need to install Firebird locally on your own computer. Firebird 2.x (SuperServer for Linux x86 as a compressed tarball) can be downloaded from the official Firebird website: http://firebirdsql.org.

Then go to the Download directory and extract the package using:

tar -xf FirebirdSS-2.0.1.*

Now go to the extracted directory and install the server as root:

sudo sh install.sh

You will of course need a directory to store the databases. The example below uses /srv/firebird:

sudo mkdir /srv/firebird

sudo chown firebird:firebird /srv/firebird

In order to connect from the local machine to the server, you will need to specify the following in IBExpert in the Database Registration:

server: remote

servername:localhost (oder 127.0.0.1)

... still undergoing testing and amendments.

See also:

Using IBExpert and Delphi applications in a Linux environment, accessing Firebird

Registering a database (using the EMPLOYEE example)

Working with a database

Where to go from here

Environment Options

IBExpert Personal Edition

The IBExpert Personal Edition is a free version, offering new users the chance to get acquainted with IBExpert at their own pace. It is however somewhat limited in its functionality, and does not include the following features:

These features can be viewed and tested in the IBExpert Trial Version.

To download the IBExpert Personal Edition you will need to register in the IBExpert Download Center: http://www.ibexpert.com/downloadcenter.

Enter a valid e-mail address to receive your personal password, allowing you access to the the IBExpert Download Center:

Simply follow the directions for new and existing users, as detailed in the dialog.

Once you have received your password you can login into the IBExpert Download Center and download either the IBExpert Personal Edition or the IBExpert Trial Version:

The IBExpert Download Center is the first application created with IBExpertWebForms, a technology which was introduced as a full Trial Version in IBExpert version 2007.06.05.

You can use this registration information to also view and download the IBExpert documentation, with over 900 pages of IBExpert, Firebird and InterBase knowledge, in PDF format. Go to http://www.h-k.de/docu/ or the Download page in the Download Center.

Further information regarding the free IBExpert Personal Edition can be found on our website.

IBExpert Server Tools

IBExpert Server Tools includes IBEScript.exe, IBEScript.dll, IBExpertBackupRestore, IBExpertInstanceManager, IBExpertJobScheduler and IBExpertTransactionMonitor. This product does not include the IBExpert IDE!

These tools are vital for typical administration tasks, for example, importing or exporting data from or to any ODBC data source such as MS SQL, Oracle, DB2, IBM iSeries, Excel, Access and so on. Programming a data interface based on this technology between any InterBase/Firebird and ODBC platform takes just minutes.

All functionalities of the IBEBlock scripting language are also available on fully licensed Server Tool computers, for example, metadata and data comparison, multiple database access, etc.

For details of the various license models please refer to the IBExpert website.

Download and install IBExpert Server Tools

The licensed IBExpert Server Tools products can be downloaded from our customer download area. Please refer to the documentation concerning the download of the IBExpert customer version for details.

The installation occurs quickly an easily with the IBExpert Install Wizard. Again, refer to the IBExpert Installation documentation for details - the only difference is the list of components to be installed.

Registering a database (using the EMPLOYEE example)

In order to administrate a database using IBExpert, it is first necessary to register the database. For detailed information regarding database registration, please refer to Register Database.

Here we will briefly show how to register a database, based on the sample EMPLOYEE database supplied with both Firebird and InterBase.

First open the Register Database dialog, using the IBExpert menu item Database / Register Database, right-clicking in the Database Explorer (left-hand panel) and selecting the Register Database menu item, or using the key shortcut [Shift + Alt + R].

The Register Database dialog appears:

(1) Server: first the server storing the database needs to be specified. This can be local (localhost) or remote (see Create Database). By specifying a local server, fields (2) and (3) are automatically blended out, as they are in this case irrelevant. By specifying Remote and localhost a protocol can be specified and used even when working locally.

(2) Server name: must be known when accessing remotely. The standard port for InterBase and Firebird is 3050. However this is sometimes altered for obvious reasons of security, or when other databases are already using this port. If a different port is to be used for the InterBase/Firebird connection, the port number needs to be included as part of the server name (parameter is server/port). For example, if port number 3055 is to be used, the server name is SERVER/3055. This is sometimes the case when a Firewall or a proxy server is used, or when another program uses the standard port. For using an alias path for a remote connection, please refer to the article remote database connect using an alias.

(3) Protocol: a pull-down list of three options: TCP/IP, NetBEUI or SPX. TCP/IP is the worldwide standard (please refer to Register Database for more information).

(4) Server versions: this enables a server version to be specified as standard/default from the pull-down list of options. This is necessary for various internal lists. For example, possible key words can be limited this way.

If you're not sure of the Firebird version of your database, register the database initially with any server version. Once registered, connect the database and, when the database name is marked in the DB Explorer, you can view the actual server version in the SQL Assistant. Your database registration can then be amended using the IBExpert Database menu item, Database Registration Info.

(5) Database File: by clicking on the folder icon to the right of this field, the path can easily be found and the database name and physical path entered. For example for Firebird:

C:Programs\Firebird\Firebird_1_5\examples\EMPLOYEE.FDB

for InterBase:

C:Programs\Interbase\examples\EMPLOYEE.GDB

If no database alias has been specified, the database name must always be specified with the drive and path. Please note that the database file for a Windows server must be on a physical drive on the server, because InterBase/Firebird does not support databases on mapped drive letters.

(6) Database Alias: descriptive name for the database (does not have to conform to any norms, but is rather a logical name). The actual database name and server path and drive information are hidden behind this simple alias name - aiding security, as users only need to be informed of the alias name and not the real location of the database. For example:

Employee

(7) User Name: the database owner (i.e. the creator of the database) or SYSDBA.

(8) Password: if this field is left empty, the password needs to be entered each time the database is opened. Please refer to Database Login for further information. The default password for SYSDBA is masterkey. Although this may be used to create and register a database, it is recommended - for security reasons - that this password be changed at the earliest opportunity.

(9) Role: an alternative to (7) and (8);can initially be left empty.

(10) Charset (abbreviation for Character Set): The default character set can be altered and specified as wished. This is useful when the database is designed to be used for foreign languages, as this character set is applicable for all areas of the database unless overridden by the domain or field definition. If not specified, the parameter defaults to NONE (the default character set of EMPLOYEE.FDB), i.e. values are stored exactly as typed. For more information regarding this subject, please refer to Charset/Default Character Set. If a character set was not defined when creating the database, it should not be used here.

(11) Do NOT perform conversion from/to UTF8: (new to IBExpert version 2009.06.15) When working with a database using UTF8 character set, IBExpert performs automatical conversion from UTF8 to Windows Unicode (for example, when a stored procedure is opened for editing), and backwards (when a stored procedure is compiled). This applies to Firebird 2.1 and 2.5 databases. For other databases you need to enable this behavior manually (if you really need this!) by flagging this checkbox.

(12) Trusted authentication: If Firebird version 2.1 or higher has been specified under (4) Server versions, an extra check-box option appears for the specification of Trusted authentication:

(13) Additional connect parameters: input field for additional specifications. For example, system objects such as system tables and system-generated domains and triggers can be specified here. They will then automatically be loaded into the Database Explorer when opening the database alias.

(14) Path to ISC4.GDB & Client library file: The Path to ISC4.GDB (only appears if older versions of Firebird or InterBase have been specified under (4)) can be found in the InterBase or Firebird main directory. This database holds a list of all registered users with their encrypted passwords, who are allowed to access this server. When creating new users in earlier InterBase versions (<6), IBExpert needs to be told where the ISC4.GDB can be found. Since InterBase version 6 or Firebird 1 there is a services API. So those working with newer versions may ignore this field! If Firebird 2.0 or higher has been specified under (4) the client access library, fbclient.dll location is displayed under Client library file.

(15) Always capitalize database objects' names (checkbox): this is important as in SQL Dialect 3 entries can be written in upper or lower case (conforming to the SQL 92 standard). InterBase however accepts such words as written in lower case, but does not recognize them when written in upper case. It is therefore recommended this always be activated.

(16) Font character set: this is only for the IBExpert interface display. It depends on the Windows language. If an ANSI-compatible language is being used, then the ANSI_CHARSET should be specified.

(17) Test connect: the Comdiag dialog appears with a message stating that everything works fine, or an error message - please refer to the IBExpert Services menu item, Communication Diagnostics for further information.

(18) Copy Alias Info: alias information from other existing registered databases can be used here as a basis for the current database. Simply click on the button and select the registered database which is to be used as the alias.

(19) Register or Cancel: after working through these options, the database can be registered or cancelled.

Details of further options (listed in the left-hand panel in the Register Database window) may be found under Register Database (individual subjects are listed on the right of the screen in the upper gray panel in the online documentation). These are not compulsory, and may be altered at a later date, if wished, using the Database / Database Registration Info menu item.

Following successful registration of EMPLOYEE database, it will appear in the on the left-hand side. Simply double-click on the database name to connect to it.

Working with a database

A registered database can be connected simply by double-clicking on the database name in the DB Explorer.

Alternatively use the IBExpert menu item Database / Connect to Database, click the Connect Database icon in the toolbar, or use the key shortcut [Shift + Ctrl + C]. The database and its objects appear in a tree form in the DB Explorer:

For further information with regard to the details displayed in the DB Explorer, please refer to Register Database / Additional and the IBExpert Options menu item, Environment Options / Tools for a choice of alternatives regarding the DB Explorer.

The individual database objects may be opened by double-clicking on the object name.

The IBExpert Screen chapter provides assistance regarding the navigation of IBExpert. Options and templates may be adapted and customized using the IBExpert Options menu. Other important IBExpert features can be found in the IBExpert Tools menu and IBExpert Services menu.

The IBExpert online documentation provides not only a comprehensive documentation for using IBExpert, but also offers many tips for those new to database development. The online documentation can be viewed under http://ibexpert.net/ibe/pmwiki.php?n=Doc.IBExpert. The documentation includes a Search function and a Recent Changes function. Or you can download the complete documentation as a PDF file onto your hard drive (use your registration details from the IBExpert Download Center to access: http://www.h-k.de/docu/).

And if you can't find an answer to your problem there, please mail us at documentation@ibexpert.com.

See also:

Database Objects

IBExpert Screen

SQL Editor

IBExpert Help menu

IBExpert screen

When IBExpert is started, the standard IBExpert screen appears as follows:

The standard IBExpert settings display a large working window, with the menu (2) and toolbars (3) at the top of the screen, a windows bar (6) and status bar (7) at the bottom, and the DB Explorer (4) on the left, divided from the SQL Assistant (lower left) (5) by a splitter.

The IBExpert View menu can be used to blend the DB Explorer, status bar, windows bar and toolbars in or out.

Further visual options can be specified by the user in the IBExpert Options menu.

IBExpert Splash screen

The IBExpert splash screen appears when IBExpert is started. It displays the IBExpert logo and version number.

The splash screen may be disabled if wished, by checking the Don't Show Splash Screen option, found under Options / Environment Options on the initial Preferences page.

(1) Title bar

The title bar is the blue horizontal bar at the top of the main IBExpert screen, and at the top of all IBExpert editors. It displays the program or editor name on the left, and in the right hand corner there are four small icons (from left to right):

- Print (only on the IBExpert screen with the MDI Interface; with the SDI Interface it appears on the active window/editor)

- Minimize IBExpert / Editor window

- Maximize IBExpert / Editor window

- Exit IBExpert / Exit Editor

(2) Menu

The IBExpert menu bar can be found at the top of the screen:

The individual menu headings conceal drop-down lists, opened simply by clicking on one of the words with the mouse or by using [Alt + {underlined letter}], e.g. the Database menu can be started by clicking with the mouse on the word database, or by using the key combination [Alt + D].

The most frequently-used menu items can also be found in the toolbars, represented as icons, or using the right mouse button in either the DB Explorer or the main editors. Alternatively keyboard shortcuts can also be used.

Keyboard shortcuts / hotkeys (Localizing Form)

Many menu items can also be executed using so-called keyboard shortcuts (a combination of keys). Where available, these are listed to the right of the menu item name in the menus, and when the cursor is placed over a toolbar icon.

[Ctrl + Shift + Alt + L] works in almost all IBExpert forms and calls the Localizing Form, where you can refer to a complete list of all available shortcuts relevant to the active dialog. It is possible to specify your own shortcut for opening the Localizing Form in the IBExpert Options menu item, Environment Options, under Localize form shortcut.

Using this dialog it is possible to alter the Item text (please use the IBExpert Tools menu item, Localize IBExpert to translate menu items into your own language), and specify your own hotkeys/shortcuts in the bottom right-hand field. Do not forget to save your changes before closing!

(3) Toolbars

The toolbar is a row of symbols (called icons), representing different menu items. By clicking on an icon with the mouse, a pre-defined menu item is executed. This shortcut is ideal for those operations performed often, as they save the necessity of repeatedly searching through the main menus.

Toolbars can be found in IBExpert in the main window and in the main editors. As with most Windows applications the toolbars are positioned as standard in a horizontal row directly below the main menu in the upper part of the window, or in the upper part of the dialogs. They can however be positioned as wished within the window (main or dialog) using drag 'n' drop.

When the cursor is placed over an icon the respective menu command and keyboard shortcut are displayed.

The user can specify which toolbars he wishes to be displayed in the main IBExpert window using the menu item View / Toolbars.

The individual icons can be specified using the Customize... menu item, opened by holding the mouse over the toolbar and right-clicking.

The Customize Tools page displays a list of the toolbar options available. User-defined toolbars can be created here if wished, or reset to the original IBExpert toolbar.

The Command page enables the different menu options listed under Categories to be selected, and the icons (in the right-hand list) added or removed to toolbars using drag 'n' drop.

The Options page allows certain menu and icon options to be checked if wished.

The Editor toolbars can be customized by clicking the downward arrow to the right of the toolbar, and using the menu item Add or Remove Buttons to check the relevant icons in the menu list, or using the above method by selecting the last menu item Customize...

Should you ever experience problems with any of the toolbars in IBExpert, simply delete IBExpert.tb, found in Documents and Settings\<user>\Application Data\HK-Software\IBExpert and then restart IBExpert. A "lost" toolbar can be made visible again by altering the parameter Visible=0 to Visible=1 in IBExpert.tb, for example:

[TSQLScriptForm.bm.Bar0]

Caption=SQL Editor

...

Visible=1

The individual IBExpert toolbars are described in detail in the Addenda.

Icons

Icons are a principal feature of graphical user interfaces. An icon is a small, square graphical symbol.

Each icon represents a menu item, the description of which appears, when the mouse is held over it. Icons can be used as shortcuts by those users who work mainly with a mouse (as opposed to the keyboard).

Icons are usually grouped together in a toolbar, which offers a series of symbols all relating to a certain subject, e.g. new database object, grants etc.

(4) Database Explorer

The IBExpert Database Explorer is a navigator which considerably simplifies the work with InterBase/Firebird databases and database objects.

The Database Folder displays all registered databases at a glance. A database connection can be made simply by double-clicking on the database name.

Each connected database is displayed in a logical tree form, including a list of all the database objects created in this database. If the database contains objects of some of these types, the name of the respective object branch appears in bold. The blue number in brackets behind the object caption shows the number of objects already created for this database.

Detailed information regarding the highlighted database object can be viewed in the SQL Assistant (below the DB Explorer).

The object tree branches can be expanded or reduced by double-clicking the object heading or clicking on the"+" or the "-" sign to the left of these headings (alternatively use the "+" and "-" keys to open a highlighted object heading). The individual objects themselves can be opened with a double-click or by pressing the [Enter] key.

The object description can be seen to the right of the object name, provided a description was inserted at the time of creation, and providing the DB Explorer is opened wide enough (the width of the DB Explorer can be expanded or reduced by dragging the right-hand splitter with the mouse).

Should you experience any problems with double-click expanding, or your object descriptions are not displayed at all, please check the IBExpert Options menu item Environment Options under the branch, DB Explorer, to ensure that these options have been checked. It is also possible to specify color display here for system objects, the Database Folder and inactive triggers. And since IBExpert version 2007.07.18, the tab position of the Database Explorer pages (top, bottom, left or right) can be also defined here.

The contents of the Database Explorer can be refreshed using [F5].

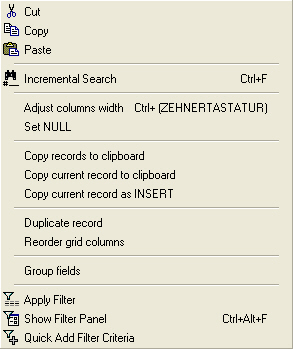

DB Explorer right-click menu

When a database, the object captions or the objects themselves are highlighted, the DB Explorer context-sensitive menu can be opened by right-clicking the mouse.

Using the control panel and right mouse button many basic metadata and data operations can be performed directly from the DB Explorer, such as creating, editing and dropping a database and its objects. Multi-select operations may also be allowed in certain situations, for example, it is possible to unregister more than one database at the same time, or activate/deactivate only selected procedures/triggers by simply selecting the required SP/triggers holding the [Ctrl] or [Shift] keys and choose the Deactivate/Activate item in the DB Explorer context menu. And several selected objects can be autogranted privileges at the same time.

The database/folder nodes can be sorted in alphabetical order (ascending or descending), using the menu item Sort child nodes alphabetically.

System indices (i.e. those indices created by Firedatabird/InterBase) can be displayed (or their display disabled) using the menu item, Database Registration Info / DB Explorer / Additional / Show System Indices.

InterBase 7.5 embedded user authentication is also supported. There is a separate node for embedded users in the Database Explorer. It is possible to create, alter and delete embedded users using the DB Explorer context menu.

When a table or view is selected, the right-click context-sensitive menu item, Show data... immediately opens the Table editor's Data page. The default shortcut for this feature is [F9]. The menu item, Create SIUD procedures is offered when a table is selected, and can be used to directly create a procedure from the table's DLL code. Please refer to the Create procedure from table for details of this feature.

IBExpert version 2008.02.19 introduced the new menu item, Apply IBEBlock to selected object(s). This feature is based on IBEBlock functionality and allows you to create your own set of code blocks to process selected object(s). Inplace debugging is available.

Firebird 2.0 blocks, IBEBlocks and IBEScripts which are stored in registered databases or in the User Database can be started from the DB Explorer, by using the relevant context-senstive menu item, when a script is highlighted, or by opening the script (double-click to open the Block Editor) and executing with [F9].

The text input field at the top of the DB Explorer (directly underneath the tabs) can be used to filter object names, e.g. to search for an object, EMP, simply type EMP. If EMP* or EMP% is typed, IBExpert displays all objects beginning with EMP; for an object ending in EMP, type *EMP or %EMP. To display objects which have a substring in their name, it is necessary to type *EMP* or ? For example, to display objects whose names start with EMP and are exactly 6 symbols in length. In this case type EMP???. Regular expressions are, of course, also allowed.

Please note that this option does not search for individual fields - if this is required, use the IBExpert Tools menu item, Search in Metadata.

Certain display default filters can be defined, under Register Database / Explorer Filters. And under Database Registration Info or Register Database, system tables, system generated domains and triggers and object details (fields, triggers etc. relating to a specific object) can be displayed or blended out as wished, by clicking on the Additional / DB Explorer branches.

The DB Explorer includes the following pages:

It is only possible to remove the Windows Manager page, if you do not need it: select the Windows page and right-click on the Windows tree. Then select the Floating Windows Manager. You can then close the Windows Manager window (click the X in the top right-hand corner). If you need to open this again use either [Alt + 0] or the IBExpert Windows menu item, Windows Manager.

Should you not be able to view the tabs of those pages you use the most, change their position using the IBExpert Environment Options menu item, Tools / DB Explorer.

[F11] blends the DB Explorer in and out. Alternatively refer to the IBExpert Menu item View / Autohide DB Explorer. This option namely enables the DB Explorer to disappear automatically when any editor is opened - allowing a larger working area. It is blended back into view simply by holding the mouse over the left-hand side of the IBExpert main window.

Drag 'n' dropping objects into code editors

Objects may be dragged 'n' dropped from the DB Explorer and SQL Assistant into many of the IBExpert Tools and Services code editor windows, for example, the SQL Editor and Query Builder. When an object node(s) is dragged from the DB Explorer or SQL Assistant, IBExpert opens the Text to insert window, which offers various relevant versions of text to be inserted into the code editor. Even the charcase of keywords and identifiers specified under Options / Editor Options / Code Insight is taken into consideration. And since IBExpert version 2009.08.17 it is possible to format code generated in this way.

If you wish to drag and drop a database node into the editor, for example to insert a database alias name instead of the full path and file name, simply hold down the [Ctrl] or [Shift] key when dragging 'n' dropping the node. In this case only node caption will be inserted.

IBExpert version 2008.02.19 introduced the possibility to create your own sets of statements that will be composed when you drag-n-drop object(s) from the Database Explorer into any code editor. This feature is based on IBEBlock.

Database Folder

The DB Explorer Database Folder can be used to specify a selection of databases as wished, so that it is not necessary to search through all available databases each time a specific database is required. The database folder allows a hierarchical classification of the Database Registration. This is for example useful for system vendors with many customers and databases, and simplifies, for example, the logging in to customer databases via a router.

When a database is registered, it is automatically displayed here in the folder list. Connected databases are displayed in bold, disconnected in normal type. If wished it is possible to blend out all unconnected databases using the DB Explorer right-click menu item, Hide Disconnected Databases.

A new database folder can be created in the DB Explorer by highlighting the connected database for which a folder is to be created, right-clicking and selecting New Database Folder ... (or [Ctrl + N]).

It is then possible to rename the database folder, by selecting the folder and using the right-click context-sensitive menu or [Ctrl + O]:

It is also possible to store server information (server type, server name, server version, connection protocol) and client library name for database folders.

A folder can also be deleted if no longer needed (again, using the right-click menu or [Ctrl + Del]). Please be careful when using this delete command, as IBExpert does not ask for confirmation before deleting the folder!

Project View

(This feature is unfortunately not included in the IBExpert Personal Edition.) In the DB Explorer, projects can be defined to streamline the overview of database objects currently being worked with.

Database objects within a database can be hierarchically classified (user-specified) as wished. For example, for an Accounts project, only those objects necessary for all accounting processes are included, a Sales project would include certain objects used in Accounts and also, in addition, sales-specific objects.

This is ideal for large software projects in an enterprise.

The first time a folder or object is inserted in the project tab, IBExpert asks for confirmation whether it should create certain system tables for the project page:

This only needs to be confirmed once. Following this, folders and objects can be inserted as wished using the right mouse context-sensitive menu, [Shift + Ctrl + F] or drag 'n' drop in the Inspector Page Mode, to organize databases individually and personally.

The context-sensitive right-click menu offers a number of further options:

These menu options allow new folders to be created, objects to be added to or deleted from a project (and searched for within the Explorer tree). User items may be created and copied; and the visual display customized (Show SQL Assistant, Inspector Page Mode, Hide Disconnected Databases).

Items can be sorted in alphabetical order using the menu item Sort child nodes alphabetically.

Diagrams (Database Designer)

The Diagrams page provides a Model Navigator to navigate models in the Database Designer quickly and easily.

Simply click on an object in the DB Explorer, and it is immediately marked in the main Database Designer window. Double-clicking on a selected object automatically opens the Model Options page in the Database Designer.

Please also refer to the Model Navigator in the SQL Assistant.

Windows Manager

The Windows Manager can be opened using the IBExpert Windows menu item Windows Manager, the key combination [Alt + O], or - of course - by simply clicking on the Windows tab heading directly in the DB Explorer.

In the DB Explorer, the Windows page displays a list of all open windows, and allows the user to change quickly and easily from one window to the next by simply clicking on the object name in the list.

The right mouse button can be used to close individual or all windows, or to find the selected object in the DB Explorer database tree.

The Windows Manager can also be "separated" from the DB Explorer and floated using the right-click context menu. The floating Window can be returned to the DB Explorer by unchecking the context menu-item Floating Windows Manager.

The open windows can also be viewed and selected in the windows bar, directly above the status bar at the bottom of the IBExpert Screen.

Recent List